When you work with frameworks such as ASP.NET or Django, the ORM (Entity Framework, Django ORM) generates migrations for you almost magically, so with DynamoDB it may seem strange that you have to write migrations yourself. That is the price of using NoSQL and giving up ORMs, but don't worry: managing migrations yourself isn't that scary.

Current State

Before we jump into migrations, I want to show you how we describe the state the tables should be in.

```javascript
const TABLE_NAME_POSTFIX = `_${process.env.ENV}`

const TABLES_NAMES = {
  users: `users${TABLE_NAME_POSTFIX}`,
  projects: `projects${TABLE_NAME_POSTFIX}`,
  // we use it to store the index of the last executed migration
  management: `management${TABLE_NAME_POSTFIX}`,
  // other tables names ...
}

// tableParams(tableName, primaryKey) builds the createTable parameters;
// it comes from a small utils library
const TABLES_PARAMS = [
  tableParams(TABLES_NAMES.users, 'id'),
  tableParams(TABLES_NAMES.projects, 'id'),
  tableParams(TABLES_NAMES.management, 'id'),
  // other tables params ...
]

module.exports = { TABLE_NAME_POSTFIX, TABLES_NAMES, TABLES_PARAMS }
```

The `tableParams` function, which turns a table name and a primary key into parameters for DynamoDB, comes from a tiny library with common utils.
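The utils library itself isn't shown here. As a rough idea of what such a helper could return, here is a hypothetical stand-in for `tableParams`, assuming the primary key is a single string partition key and the table uses on-demand billing (both are assumptions; the real helper may use other key types or provisioned throughput):

```javascript
// Hypothetical stand-in for the utils library's tableParams helper.
// Assumes a single string partition key (HASH) and on-demand billing.
const tableParams = (TableName, key) => ({
  TableName,
  KeySchema: [{ AttributeName: key, KeyType: 'HASH' }],
  AttributeDefinitions: [{ AttributeName: key, AttributeType: 'S' }],
  BillingMode: 'PAY_PER_REQUEST'
})

// Parameters that can be passed directly to DynamoDB's createTable
const usersParams = tableParams('users_dev', 'id')
```

With `PAY_PER_REQUEST` no `ProvisionedThroughput` is needed, which keeps the helper down to the two inputs the article passes to it.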

Create and Delete Tables

First, we want to delete the tables we no longer need and create the new ones. To do this, we compare the names of the existing DynamoDB tables with our table parameters. If they don't match, we delete and create tables so that the database matches the desired state.

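```javascript
const database = new AWS.DynamoDB()

const neededTables = TABLES_PARAMS.map(({ TableName }) => TableName)
// listTables returns every table in the account and region (up to 100 per call),
// so only look at the current environment's tables; otherwise the tables
// of other environments would be deleted as well
const existingTables = await database
  .listTables()
  .promise()
  .then(data => data.TableNames.filter(name => name.endsWith(TABLE_NAME_POSTFIX)))

const dbsToDelete = _.without(existingTables, ...neededTables)
const dbsToCreate = _.without(neededTables, ...existingTables)

await Promise.all(
  dbsToDelete.map(TableName =>
    database.deleteTable({ TableName }).promise()
  )
)

await Promise.all(
  dbsToCreate.map(async TableName => {
    await database
      .createTable(TABLES_PARAMS.find(t => t.TableName === TableName))
      .promise()
    // createTable returns while the table is still CREATING,
    // so wait until it is ACTIVE before reading or writing items
    await database.waitFor('tableExists', { TableName }).promise()
  })
)
```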
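The comparison itself is plain set arithmetic. Here is a dependency-free sketch of it, using a hypothetical `diffTables` helper (not part of the original code) that uses `Set` instead of lodash and, as an assumption, ignores every table whose name doesn't end with the current environment's postfix, so another environment's tables are never scheduled for deletion:

```javascript
// Hypothetical helper: decide which tables to delete and which to create.
// existing: names returned by listTables
// needed:   names taken from TABLES_PARAMS
// postfix:  e.g. '_dev'; tables without it belong to other environments
const diffTables = (existing, needed, postfix) => {
  const ours = existing.filter(name => name.endsWith(postfix))
  const neededSet = new Set(needed)
  const oursSet = new Set(ours)
  return {
    toDelete: ours.filter(name => !neededSet.has(name)),
    toCreate: needed.filter(name => !oursSet.has(name))
  }
}

const { toDelete, toCreate } = diffTables(
  ['users_dev', 'old_dev', 'users_prod'],
  ['users_dev', 'projects_dev'],
  '_dev'
)
// toDelete is ['old_dev'], toCreate is ['projects_dev'];
// users_prod is left alone because it belongs to another environment
```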

Running Migrations

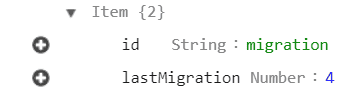

To know which migration was executed last time, we have the table with migration information. It looks like this:

All our migrations are simply an array of asynchronous functions that perform operations on tables items.

```javascript
const aws = require('aws-sdk')

// same naming scheme as TABLES_NAMES.projects
const PROJECTS_TABLE = `projects_${process.env.ENV}`

module.exports = [
  async () => { /* ... */ },
  async () => { /* ... */ },
  async () => { /* ... */ },
  // set creationTime on projects that don't have it yet
  async () => {
    const documentClient = new aws.DynamoDB.DocumentClient()
    // note: one scan call reads at most 1 MB; for bigger tables keep
    // scanning with ExclusiveStartKey set to the returned LastEvaluatedKey
    const projects = await documentClient
      .scan({
        TableName: PROJECTS_TABLE,
        ProjectionExpression: 'id',
        FilterExpression: 'attribute_not_exists(creationTime)'
      })
      .promise()
      .then(({ Items }) => Items)

    return Promise.all(
      projects.map(({ id }) =>
        documentClient
          .update({
            TableName: PROJECTS_TABLE,
            Key: { id },
            UpdateExpression: 'set creationTime = :creationTime',
            ExpressionAttributeValues: {
              ':creationTime': Date.now()
            }
          })
          .promise()
      )
    )
  },
  async () => { /* ... */ },
  async () => { /* ... */ },
]
```
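One caveat: a single `scan` call reads at most 1 MB of data, so on a large table the migration above would only update the projects found in the first page. A minimal sketch of collecting every page, written against a generic `scanPage(params)` function (a hypothetical parameter; with the DocumentClient it would be `params => documentClient.scan(params).promise()`), could look like this:

```javascript
// Collect all items of a paginated scan.
// scanPage(params) must resolve to { Items, LastEvaluatedKey },
// the same shape DocumentClient.scan(...).promise() resolves to.
const scanAll = async (scanPage, params) => {
  const items = []
  let startKey
  do {
    const page = await scanPage(
      startKey ? { ...params, ExclusiveStartKey: startKey } : params
    )
    items.push(...page.Items)
    // LastEvaluatedKey is missing on the last page
    startKey = page.LastEvaluatedKey
  } while (startKey)
  return items
}

// Usage with an in-memory fake that returns two pages
const pages = [
  { Items: [{ id: 'a' }], LastEvaluatedKey: { id: 'a' } },
  { Items: [{ id: 'b' }] }
]
const fakeScan = async params => (params.ExclusiveStartKey ? pages[1] : pages[0])

scanAll(fakeScan, { TableName: 'projects_dev' })
  .then(items => console.log(items.map(item => item.id)))
// logs [ 'a', 'b' ]
```

Because `FilterExpression` is applied page by page, a page can come back empty while still carrying a `LastEvaluatedKey`; the loop keeps going in that case.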

To run these migrations, we take the index of the last executed one and run every migration after it, in order. If there is no migration item in the management table, the tables were just created and are empty, so there is no need to run migrations; we only store the index of the latest migration.

```javascript
const documentClient = new AWS.DynamoDB.DocumentClient()

const migrationItem = await documentClient
  .get({
    TableName: TABLES_NAMES.management,
    Key: { id: 'migration' }
  })
  .promise()
  .then(({ Item }) => Item)

// no item means the tables were just created, so there is nothing to migrate
if (migrationItem && migrationItem.lastMigration !== undefined) {
  const migrationsToRun = migrations.slice(migrationItem.lastMigration + 1)
  // run migrations one by one, in order: later ones may depend on earlier ones
  for (const runMigration of migrationsToRun) {
    await runMigration()
  }
}

await documentClient
  .put({
    TableName: TABLES_NAMES.management,
    Item: {
      id: 'migration',
      lastMigration: migrations.length - 1
    }
  })
  .promise()
```
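The bookkeeping part can be separated from DynamoDB, which makes it easy to test. In this sketch, `pendingMigrations` and `runPending` are hypothetical helpers: the first picks the migrations after the stored index (none when there is no stored index), and the second awaits them one at a time so they run in array order instead of concurrently, then returns the index to store.

```javascript
// Migrations that still have to run, given the stored index.
// lastMigration is undefined when the management item doesn't exist yet.
const pendingMigrations = (migrations, lastMigration) =>
  lastMigration === undefined ? [] : migrations.slice(lastMigration + 1)

// Run pending migrations sequentially and return the new index to store.
const runPending = async (migrations, lastMigration) => {
  for (const migrate of pendingMigrations(migrations, lastMigration)) {
    await migrate()
  }
  return migrations.length - 1
}

// Usage: three migrations, the one at index 0 was already applied
const log = []
const demo = [
  async () => log.push(0),
  async () => log.push(1),
  async () => log.push(2)
]
runPending(demo, 0).then(index => console.log(log, index))
// logs [ 1, 2 ] 2
```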

Finally, we put everything together in a database preparation script. We can run it automatically in our CI/CD pipeline before deploying a new version of the back end.

```javascript
require('dotenv').config()
require('../src/utils/aws').setupAWS()

const _ = require('lodash')
const AWS = require('aws-sdk')
const { TABLES_NAMES, TABLES_PARAMS } = require('../src/constants/db')
const migrations = require('../src/migrations')

const TABLE_NAME_POSTFIX = `_${process.env.ENV}`

const prepareDB = async () => {
  const database = new AWS.DynamoDB()

  const neededTables = TABLES_PARAMS.map(({ TableName }) => TableName)
  // only look at the current environment's tables
  const existingTables = await database
    .listTables()
    .promise()
    .then(data => data.TableNames.filter(name => name.endsWith(TABLE_NAME_POSTFIX)))

  const dbsToDelete = _.without(existingTables, ...neededTables)
  const dbsToCreate = _.without(neededTables, ...existingTables)

  await Promise.all(
    dbsToDelete.map(TableName =>
      database.deleteTable({ TableName }).promise()
    )
  )

  await Promise.all(
    dbsToCreate.map(async TableName => {
      await database
        .createTable(TABLES_PARAMS.find(t => t.TableName === TableName))
        .promise()
      // wait until the new table is ACTIVE
      await database.waitFor('tableExists', { TableName }).promise()
    })
  )

  const documentClient = new AWS.DynamoDB.DocumentClient()

  const migrationItem = await documentClient
    .get({
      TableName: TABLES_NAMES.management,
      Key: { id: 'migration' }
    })
    .promise()
    .then(({ Item }) => Item)

  // no item means the tables were just created and are empty
  if (migrationItem && migrationItem.lastMigration !== undefined) {
    // run pending migrations sequentially, in order
    for (const runMigration of migrations.slice(migrationItem.lastMigration + 1)) {
      await runMigration()
    }
  }

  await documentClient
    .put({
      TableName: TABLES_NAMES.management,
      Item: {
        id: 'migration',
        lastMigration: migrations.length - 1
      }
    })
    .promise()
}

// exit with a non-zero code so the CI/CD pipeline stops on failure
prepareDB().catch(error => {
  console.error(error)
  process.exit(1)
})
```

That's a simple implementation of a migration mechanism :) If you would like to know more about any related topic, please let me know!