Finding Hidden Comic Book Deals: A TypeScript Scraping Solution

The Value Challenge in Comic Book Collecting

Comic book collectors face a common challenge: getting the best value for their money. While the cover price is straightforward, the actual value can vary significantly when you consider the number of pages in each volume. A $20 comic might contain 100 pages, while another at the same price point offers 400 pages. To solve this practical problem, we'll develop a TypeScript program that calculates and compares the price per page, helping collectors make data-driven purchasing decisions. The complete source code for this project is available on GitHub.
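The arithmetic behind that comparison can be sketched in a few lines. The `pricePerPage` helper and the two volumes are hypothetical and use only the numbers from the example above; this is an illustration of the metric, not the project's code.

```typescript
// Hypothetical helper: cost of a single page, given the cover price and page count.
const pricePerPage = (price: number, numberOfPages: number): number =>
  price / numberOfPages

// Two volumes at the same $20 price point, as in the example above.
const thinVolume = pricePerPage(20, 100)
const thickVolume = pricePerPage(20, 400)

console.log(thinVolume.toFixed(2)) // "0.20"
console.log(thickVolume.toFixed(2)) // "0.05"
console.log(thinVolume / thickVolume) // 4
```

At the same cover price, the 400-page volume costs a quarter as much per page, which is exactly the kind of difference the scraper is meant to surface.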

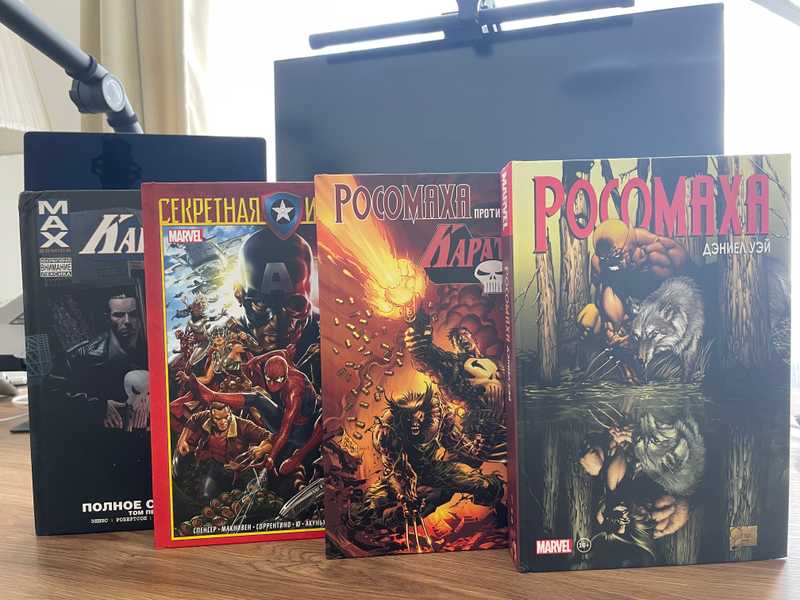

Using this approach, I bought four 400-plus-page comics for $85 total, including shipping costs. The implementation focuses on the Wildberries e-commerce platform, which serves Eastern European markets. The code structure is modular, allowing for easy adaptation to other online retailers by modifying the web scraping functions.

Defining the Data Model

Our data model represents each comic book with a TypeScript type containing four properties: name, price, number of pages, and product URL. The getBookPagePrice function divides the price by the number of pages to get the cost per page, our key metric for comparison, and printBook formats a book for console output.

    export type Book = {
      name: string
      price: number
      numberOfPages: number
      url: string
    }

    export const getBookPagePrice = ({
      price,
      numberOfPages,
    }: Pick<Book, "price" | "numberOfPages">): number => {
      return price / numberOfPages
    }

    export const printBook = (book: Book) => {
      return [
        `Name: ${book.name}`,
        `Price: ${book.price}`,
        `Number of pages: ${book.numberOfPages}`,
        `Price per page: ${getBookPagePrice(book).toFixed(2)}`,
        `URL: ${book.url}`,
      ].join("\n")
    }

Managing Browser Lifecycle

For web scraping with Puppeteer, we'll create a withBrowser helper function that handles browser lifecycle. Our main findBooks function will receive a browser instance from it and focus on the scraping logic, while withBrowser takes care of proper browser cleanup.

    import { Browser, launch } from "puppeteer"

    type WithBrowserFn<T> = (browser: Browser) => Promise<T>

    export const withBrowser = async <T>(fn: WithBrowserFn<T>) => {
      const browser = await launch({
        headless: true,
        args: ["--no-sandbox", "--disable-setuid-sandbox"],
      })

      try {
        return await fn(browser)
      } finally {
        await browser.close()
      }
    }

Page Lifecycle Management

To handle browser page lifecycle management, we'll implement a higher-order function that encapsulates page creation and cleanup. The makeWithPage function takes a URL and browser instance as input, returning a function that manages the page's lifecycle. This pattern ensures proper resource cleanup and standardizes page configuration across our scraping operations.
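The cleanup guarantee is easiest to see without a real browser. The sketch below is a hypothetical stand-in: `FakePage` and `makeWithFakePage` are invented for illustration and only record whether a page was closed. It assumes the same try/finally structure as the Puppeteer implementation that follows.

```typescript
// Hypothetical stand-in for a Puppeteer page: it only records whether it was closed.
type FakePage = { url: string; closed: boolean }

const makeWithFakePage = (url: string) => {
  const opened: FakePage[] = []

  const withPage = async <T>(
    fn: (page: FakePage) => Promise<T>,
  ): Promise<T> => {
    const page: FakePage = { url, closed: false }
    opened.push(page)
    try {
      return await fn(page)
    } finally {
      // Runs whether fn resolved or threw
      page.closed = true
    }
  }

  return { withPage, opened }
}

const runLifecycleDemo = async (): Promise<boolean[]> => {
  const { withPage, opened } = makeWithFakePage("https://example.com")

  // A callback that succeeds
  await withPage(async (page) => page.url.length)

  // A callback that fails; the error is swallowed here only to keep the demo running
  await withPage(async () => {
    throw new Error("scrape failed")
  }).catch(() => undefined)

  return opened.map((page) => page.closed)
}

runLifecycleDemo().then((closed) => console.log(closed)) // logs [ true, true ]
```

Both pages end up closed, including the one whose callback threw, which is the property the scraping functions rely on.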

    import { Page, Browser } from "puppeteer"

    type WithPageFn<T> = (page: Page) => Promise<T>

    type MakeWithPageInput = {
      url: string
      browser: Browser
    }

    export const makeWithPage = ({ url, browser }: MakeWithPageInput) => {
      const withPage = async <T>(fn: WithPageFn<T>) => {
        const page = await browser.newPage()

        // Setup and navigation live inside try so the page is closed
        // even when navigation fails
        try {
          await page.setViewport({ width: 1280, height: 800 })
          await page.setUserAgent(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
          )
          await page.goto(url, { waitUntil: "networkidle2" })

          return await fn(page)
        } finally {
          await page.close()
        }
      }

      return withPage
    }

Scraping Search Results

To extract product links from the search results page, we need to deal with infinite scroll: more products are added to the page as you scroll down. Wildberries also provides pagination controls, but we intentionally stay on the first page, since it contains the most relevant results sorted by popularity. The scrapeSearchPage function scrolls to the bottom of that page, waits a second, and repeats until a scroll no longer changes the number of product links. Once all products are loaded, it extracts their URLs using a DOM selector and throws an error if none were found.
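The stopping rule can be sketched on its own, without a browser. The `scrollUntilStable` helper below is hypothetical: `getCount` and `scroll` stand in for the `page.evaluate` calls of a real scraper, the loop is an iterative variant of the idea rather than the article's recursive function, and `maxScrolls` is an added safety cap that is not part of the original.

```typescript
// Hypothetical helper: keeps scrolling until the observed item count stops changing.
const scrollUntilStable = async (
  getCount: () => Promise<number>,
  scroll: () => Promise<void>,
  maxScrolls = 50,
): Promise<number> => {
  let previousCount = -1
  for (let i = 0; i < maxScrolls; i++) {
    const currentCount = await getCount()
    // Same count as before the last scroll: nothing new was loaded
    if (currentCount === previousCount) return currentCount
    previousCount = currentCount
    await scroll()
  }
  return previousCount
}

// Simulated page: starts with 30 items, each scroll loads 30 more, up to 90.
let loaded = 30
const getCount = async () => loaded
const scroll = async () => {
  loaded = Math.min(loaded + 30, 90)
}

scrollUntilStable(getCount, scroll).then((count) => console.log(count)) // logs 90
```

A real version would also wait between scrolls so the site has time to load the next chunk of products, as the Puppeteer implementation does with its one-second sleep.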

    import { sleep } from "@lib/utils/sleep"
    import { Page } from "puppeteer"

    const productLinkSelector = ".product-card-list .product-card__link"

    export async function scrapeSearchPage(page: Page): Promise<string[]> {
      console.log(`Scraping search page: ${page.url()}`)

      const recursiveScroll = async (pageCounts: number[]) => {
        const currentProductCount = await page.evaluate(
          (selector) => document.querySelectorAll(selector).length,
          productLinkSelector,
        )

        // Stop once a count repeats. `includes` is used instead of `find`,
        // because `find` returns the falsy value 0 when the repeated count is 0,
        // which would keep scrolling forever on an empty page.
        if (pageCounts.includes(currentProductCount)) {
          return
        }

        await page.evaluate(() => window.scrollTo(0, document.body.scrollHeight))
        await sleep(1000)
        await recursiveScroll([...pageCounts, currentProductCount])
      }

      await recursiveScroll([])

      const bookLinks = await page.evaluate((selector) => {
        return Array.from(document.querySelectorAll(selector))
          .map((link) => link.getAttribute("href"))
          .filter((href): href is string => href !== null)
      }, productLinkSelector)

      if (bookLinks.length === 0) {
        throw new Error(`No books found on ${page.url()}`)
      }

      console.log(`Found ${bookLinks.length} books on ${page.url()}`)

      return bookLinks
    }

Extracting Comic Book Details

With the product links collected, we now need to visit each page and extract the comic book details. The scrapeBookPage function navigates the DOM to find the title, price, and page count for each comic. It uses Puppeteer's evaluation methods to extract text from specific selectors and throws a descriptive error whenever one of these fields cannot be extracted, so incomplete records never reach the results. The price extraction strips the whitespace used as a thousands separator and treats a comma as the decimal mark before converting the display text to a number. To find the page count, the function scans all page elements for text containing "страниц" ("pages" in Russian) and uses a regular expression to extract the number that precedes it.
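The two parsing steps can be tried out on plain strings before involving a browser. The helpers below are hypothetical pure functions that mirror the logic described above; `parsePrice` and `parsePageCount` are names invented for this sketch, the sample strings are made up, and the price pattern is anchored on a digit, which is a small variation on the pattern used in the scraper.

```typescript
// Hypothetical helper: turns display text such as "1 234 ₽" into a number.
const parsePrice = (text: string): number | undefined => {
  const raw = text.match(/\d[\d\s,.]*/)?.[0]
  if (!raw) return undefined
  // Remove whitespace separators and use "." as the decimal mark
  const value = parseFloat(raw.replace(/\s+/g, "").replace(",", "."))
  return Number.isFinite(value) && value > 0 ? value : undefined
}

// Hypothetical helper: finds a number directly followed by "страниц".
const parsePageCount = (text: string): number | undefined => {
  const digits = text.match(/(\d+)\s*страниц/)?.[1]
  return digits ? parseInt(digits, 10) : undefined
}

console.log(parsePrice("1 234 ₽")) // 1234
console.log(parsePrice("499,50 ₽")) // 499.5
console.log(parsePrice("Нет в наличии")) // undefined
console.log(parsePageCount("416 страниц")) // 416
console.log(parsePageCount("Твёрдый переплёт")) // undefined
```

Returning undefined instead of 0 or NaN makes the "could not parse" case explicit, so the caller can decide whether to throw or skip the product.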

    import { attempt } from "@lib/utils/attempt"
    import { Page } from "puppeteer"

    const headerSelector = ".product-page__header h1"

    export async function scrapeBookPage(page: Page) {
      // Wait for the product header so we know the page has rendered
      const header = await attempt(
        page.waitForSelector(headerSelector, { timeout: 5000 }),
      )

      if ("error" in header) {
        throw new Error(`Product header not found for ${page.url()}`)
      }

      const productName = await page.$eval(
        headerSelector,
        (el: Element) => el.textContent?.trim() || "",
      )

      if (!productName) {
        throw new Error(`Could not extract product name for ${page.url()}`)
      }

      const priceText = await page.$eval(
        ".price-block__final-price",
        (el: Element) => el.textContent?.trim() || "",
      )

      // Drop whitespace separators and treat "," as the decimal mark
      const priceMatch = priceText.match(/[\d\s,.]+/)
      const price = priceMatch
        ? parseFloat(priceMatch[0].replace(/\s+/g, "").replace(",", "."))
        : 0

      // parseFloat can return NaN, which a plain `price === 0` check would miss
      if (!Number.isFinite(price) || price <= 0) {
        throw new Error(`Could not extract valid price for ${page.url()}`)
      }

      // Look for text such as "416 страниц" anywhere on the page
      const numberOfPages = await page.evaluate(() => {
        for (const element of document.querySelectorAll("*")) {
          const text = element.textContent?.trim() || ""
          if (text.includes("страниц")) {
            const pagesMatch = text.match(/(\d+)\s*страниц/)
            if (pagesMatch && pagesMatch[1]) {
              return parseInt(pagesMatch[1], 10)
            }
          }
        }
        return undefined
      })

      if (!numberOfPages) {
        throw new Error(`Could not find page count for ${page.url()}`)
      }

      return {
        name: productName,
        price,
        numberOfPages,
        url: page.url(),
      }
    }

Orchestrating the Scraping Process

Now let's build the main function that orchestrates the entire scraping operation. It constructs search URLs with price filters for several comic series, extracts product links from the search results, scrapes the product pages in batches of five, and finally sorts the comics by price per page. Search pages are processed one at a time, and only the pages within a batch are scraped in parallel, which caps the number of open tabs, limits memory use, and should reduce the risk of being rate limited. Failures are logged and skipped, so the program continues even when individual requests fail.
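The orchestration code relies on a few helpers imported from "@lib/utils" whose source is not shown in this article. The sketch below gives assumed implementations of `attempt`, `toBatches`, and `chainPromises`, inferred only from how they are called; the real helpers may differ, and `tenfold` and `runBatchDemo` are invented for the demonstration.

```typescript
// Assumed result shape: either the resolved data or the caught error.
type Result<T> = { data: T } | { error: unknown }

// Assumed behavior: converts a rejection into a value instead of throwing.
const attempt = async <T>(promise: Promise<T>): Promise<Result<T>> => {
  try {
    return { data: await promise }
  } catch (error) {
    return { error }
  }
}

// Assumed behavior: splits an array into chunks of at most batchSize items.
const toBatches = <T>(items: T[], batchSize: number): T[][] => {
  const batches: T[][] = []
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize))
  }
  return batches
}

// Assumed behavior: runs async tasks one after another and collects the results.
const chainPromises = async <T>(tasks: (() => Promise<T>)[]): Promise<T[]> => {
  const results: T[] = []
  for (const task of tasks) {
    results.push(await task())
  }
  return results
}

// Hypothetical task that fails for one input, standing in for a failed page scrape.
const tenfold = async (n: number): Promise<number> => {
  if (n === 3) throw new Error("boom")
  return n * 10
}

const runBatchDemo = async (): Promise<number[]> => {
  const batches = toBatches([1, 2, 3, 4, 5], 2)
  // Batches run sequentially; items inside a batch run in parallel
  const results = await chainPromises(
    batches.map((batch) => () =>
      Promise.all(batch.map((n) => attempt(tenfold(n)))),
    ),
  )
  // Keep only the successful results
  return results.flat().flatMap((r) => ("data" in r ? [r.data] : []))
}

runBatchDemo().then((values) => console.log(values)) // logs [ 10, 20, 40, 50 ]
```

The failing item is dropped while the rest of its batch and the following batches still complete, which is the behavior the main function depends on.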

    import { order } from "@lib/utils/array/order"
    import { toBatches } from "@lib/utils/array/toBatches"
    import { withoutDuplicates } from "@lib/utils/array/withoutDuplicates"
    import { withoutUndefined } from "@lib/utils/array/withoutUndefined"
    import { attempt } from "@lib/utils/attempt"
    import { getErrorMessage } from "@lib/utils/getErrorMessage"
    import { chainPromises } from "@lib/utils/promise/chainPromises"
    import { addQueryParams } from "@lib/utils/query/addQueryParams"
    import { Browser } from "puppeteer"

    import { getBookPagePrice, printBook } from "./Book"
    import { makeWithPage } from "./scrape/makeWithPage"
    import { scrapeBookPage } from "./scrape/scrapeBookPage"
    import { scrapeSearchPage } from "./scrape/scrapeSearchPage"
    import { withBrowser } from "./scrape/withBrowser"

    const searchStrings = [
      "бэтмен комиксы",
      "люди икс комиксы",
      "сорвиголова комиксы",
      "капитан америка комиксы",
      "дэдпул комиксы",
    ]

    const maxResultsToDisplay = 20
    const batchSize = 5
    const minPrice = 40
    const maxPrice = 100

    const findBooks = async (browser: Browser) => {
      const searchUrls = searchStrings.map((searchString) =>
        addQueryParams(`https://www.wildberries.ru/catalog/0/search.aspx`, {
          page: 1,
          sort: "popular",
          search: encodeURIComponent(searchString),
          priceU: [minPrice, maxPrice].map((v) => v * 100).join(";"),
          foriginal: "1",
        }),
      )

      // Scrape the search pages one at a time and merge the product links
      const bookUrls = withoutDuplicates(
        (
          await chainPromises(
            searchUrls.map((url) => async () => {
              const result = await attempt(
                makeWithPage({ url, browser })(scrapeSearchPage),
              )

              if ("error" in result) {
                console.error(getErrorMessage(result.error))
                return []
              }

              return result.data
            }),
          )
        ).flat(),
      )

      console.log(`Found ${bookUrls.length} books total`)

      const batches = toBatches(bookUrls, batchSize)

      // Batches run sequentially; pages inside a batch are scraped in parallel
      const books = withoutUndefined(
        (
          await chainPromises(
            batches.map((batch, index) => () => {
              console.log(`Scraping batch #${index + 1} of ${batches.length}`)

              return Promise.all(
                batch.map(async (url) => {
                  const result = await attempt(
                    makeWithPage({ url, browser })(scrapeBookPage),
                  )

                  if ("error" in result) {
                    console.error(getErrorMessage(result.error))
                    return
                  }

                  return result.data
                }),
              )
            }),
          )
        ).flat(),
      )

      // Cheapest price per page first
      const sortedBooks = order(books, getBookPagePrice, "asc").slice(
        0,
        maxResultsToDisplay,
      )

      console.log("Top Deals:")
      sortedBooks.forEach((book, index) => {
        console.log(`${index + 1}. ${printBook(book)}`)
        console.log("---")
      })
    }

    withBrowser(findBooks).catch(console.error)

Conclusion

This practical approach to comic book shopping demonstrates how a simple TypeScript application can transform raw data into actionable insights, helping collectors maximize value while building their collections.